Building a recommendation system in Python – as easy as 1-2-3!

Are you interested in learning how to build your own recommendation system in Python? If so, you’ve come to the right place! Please note, this blog post is accompanied by a course called Introduction to Python Recommendation Systems that is available on LinkedIn Learning.

Welcome from Introduction to Python Recommendation Systems for Machine Learning by Lillian Pierson, P.E.

Read on to get a conceptual overview of recommendation systems and for a small Python demo (in the course, there will be MUCH more!).

Of course, we’ve all heard about machine learning and recommendation engines in big-business ecommerce. For quite some time, massive ecommerce businesses like Netflix, Amazon, and eBay have been leveraging the power of data science to improve customer service and boost sales. This technology was once cost-prohibitive to all but the major players, but recently things have changed. Thanks to multi-channel ecommerce platforms (think: Shopify) and the developers who are building custom machine learning applications, mom-and-pop online businesses now get the chance to infuse their operations with the power of data science.

In this article, I’m going to explain how you can begin using simple statistical correlation, or more advanced machine learning approaches, to build your own recommendation systems.

A recommendation system in Python, oh my!

To many, the idea of coding up their own recommendation system in Python may seem completely overwhelming. The good news is that it can actually be quite simple (depending on the approach you take). Let me explain…

There are three main classes of recommendation systems. Those are:

- Collaborative filtering systems – Collaborative systems generate recommendations based on crowd-sourced input. They recommend items based on user behavior and similarities between users. (An example is Google PageRank, which recommends similar web pages based on a web page’s backlinks.)

- Content-based filtering systems – Content-based systems generate recommendations based on items and similarities between them. (Pandora uses content-based filtering to make its music recommendations)

- Hybrid recommendation systems – Hybrid recommendation systems combine both collaborative and content-based approaches. They help improve recommendations that are derived from sparse datasets. (Netflix is a prime example of a hybrid recommender)

Collaborative systems often deploy a nearest neighbor method or an item-based collaborative filtering system – a simple system that makes recommendations based on simple regression or a weighted-sum approach. The end goal of collaborative systems is to make recommendations based on customers’ behavior, purchasing patterns, and preferences, as well as product attributes, price ranges, and product categories. Content-based systems can deploy methods as simple as averaging, or they can deploy advanced machine learning approaches in the form of Naive Bayes classifiers, clustering algorithms, or artificial neural nets.
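
To make the weighted-sum idea a little more concrete, here is a minimal sketch of item-based collaborative filtering using only NumPy. The ratings matrix, the helper names `item_similarity` and `predict_rating`, and the choice of cosine similarity are all assumptions made for illustration; a real system would use your own data and possibly a different similarity measure.

```python
import numpy as np

# Hypothetical ratings matrix: rows are users, columns are items, 0 = not rated.
ratings = np.array([
    [5, 4, 0, 1],
    [4, 5, 1, 0],
    [1, 0, 5, 4],
    [0, 1, 4, 5],
], dtype=float)


def item_similarity(r):
    """Cosine similarity between every pair of item columns."""
    norms = np.linalg.norm(r, axis=0)
    norms[norms == 0] = 1.0  # avoid dividing by zero for items nobody rated
    normalized = r / norms
    return normalized.T @ normalized


def predict_rating(r, sim, user, item):
    """Weighted sum of the user's existing ratings, weighted by item similarity."""
    rated = r[user] > 0
    weights = sim[item, rated]
    if weights.sum() == 0:
        return 0.0  # no similar rated items to learn from
    return float(weights @ r[user, rated] / weights.sum())


sim = item_similarity(ratings)
# Estimate how user 0 might rate item 2, which they have not rated yet.
prediction = predict_rating(ratings, sim, user=0, item=2)
print(round(prediction, 2))
```

The prediction leans toward the user's rating for whichever already-rated item is most similar to the target item, which is the whole intuition behind the weighted-sum approach.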

Exploring collaborative filtering approaches

Collaborative filtering systems come in two main flavors. Those are:

- Memory-based systems – These systems memorize training data. They often deploy correlation analysis, cosine similarity calculations, and k-nearest neighbor classification (shown in the demo coming up) to make recommendations.

- Model-based systems – These systems use (machine learning) models to uncover patterns and trends in training data. They often deploy Naive Bayes classifiers, clustering algorithms, or Singular Value Decomposition (SVD) methods.
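
The difference between the two flavors can be sketched side by side. The example below is an illustration under assumptions: the ratings matrix is made up, unrated entries are stored as 0 and treated as ordinary values (a simplification), and `k = 2` latent factors is an arbitrary choice.

```python
import numpy as np

# Hypothetical ratings matrix: rows are users, columns are items, 0 = not rated.
ratings = np.array([
    [5, 4, 0, 1],
    [4, 5, 1, 0],
    [1, 0, 5, 4],
    [0, 1, 4, 5],
], dtype=float)


# Memory-based: compare users directly against the stored ratings.
def cosine_similarity(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


most_similar_to_user0 = max(
    range(1, len(ratings)),
    key=lambda u: cosine_similarity(ratings[0], ratings[u]),
)

# Model-based: summarize the data with the k strongest latent factors from an SVD,
# then read estimated scores off the low-rank reconstruction.
k = 2
U, s, Vt = np.linalg.svd(ratings, full_matrices=False)
approx = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

print(most_similar_to_user0)
print(np.round(approx, 2))
```

The memory-based half keeps the raw data around and looks things up at recommendation time, while the model-based half compresses the data into a small model first and recommends from that.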

Prepping your computer to build a DIY recommendation system in Python

Now that you know what types of recommender systems are available to you and how they work, you can go ahead and start getting your hands (a little) dirty. Here I’m going to show you how to deploy a machine learning algorithm in Python (but of course, if you prefer, you can use R, WEKA, or Octave for machine learning as well).

The first thing you need to do is install the correct Python libraries and set up the programming environment. I suggest you keep things simple by just downloading and installing Anaconda onto your machine. To build machine learning applications, you will need Python’s NumPy, SciPy, Matplotlib, and scikit-learn libraries, as well as a solid Python programming environment. The reason Anaconda is terrific is that, in one quick and easy install, it installs all of these libraries for you, as well as almost 200 other useful Python libraries and the Jupyter Notebook / IPython programming environment.
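
Once the install finishes, a quick sanity check can confirm the libraries are importable. This is a small sketch, not part of Anaconda itself; the helper name `check_libraries` is made up for illustration, and the list uses each library's import name (scikit-learn is imported as `sklearn`).

```python
import importlib

# Libraries named above, listed by their import names.
REQUIRED = ["numpy", "scipy", "matplotlib", "sklearn"]


def check_libraries(names):
    """Return a dict mapping each import name to its version, or None if missing."""
    versions = {}
    for name in names:
        try:
            module = importlib.import_module(name)
            versions[name] = getattr(module, "__version__", "unknown")
        except ImportError:
            versions[name] = None
    return versions


for name, version in check_libraries(REQUIRED).items():
    print(f"{name}: {version if version else 'NOT INSTALLED'}")
```

If anything shows up as not installed, re-running the Anaconda installer or installing that one package should sort it out.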

Getting started with a quick-and-easy k-nearest neighbor classifier

To get started with machine learning and a nearest neighbor-based recommendation system in Python, you’ll need scikit-learn. Start by launching the Jupyter Notebook / IPython application that was installed with Anaconda. Now, for a quick-and-dirty example of using the k-nearest neighbor algorithm in Python, check out the code below. This code shows how to use a k-nearest neighbor classifier to find the nearest neighbor to a new incoming data point.
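
As a minimal sketch of the idea (not the course code), the example below uses scikit-learn's `NearestNeighbors` to find the single closest known item to a new data point. The feature matrix, the meaning of its columns, and the Euclidean metric are all assumptions chosen for illustration.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors

# Hypothetical item features: [price, average rating, popularity score].
items = np.array([
    [10.0, 4.5, 0.8],
    [12.0, 4.0, 0.7],
    [50.0, 3.0, 0.2],
    [55.0, 2.5, 0.1],
])

# Fit a 1-nearest-neighbor model on the known items.
model = NearestNeighbors(n_neighbors=1, metric="euclidean")
model.fit(items)

# A new incoming data point to match against the known items.
new_point = np.array([[11.0, 4.4, 0.75]])
distances, indices = model.kneighbors(new_point)

nearest_index = int(indices[0][0])
print(nearest_index, items[nearest_index])
```

Because the features here are on very different scales, price dominates the distance. In practice you would probably standardize the columns first so that each feature gets a fair say.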

Test it out for yourself. Play around with it a bit. And, congratulations – you have already made some good progress towards learning how to make your own DIY recommendation engine. If you’d like to receive updates from me on things that will enhance and expedite your career in data, be sure to sign up for my newsletter in the sign-up box below.

More resources to get ahead...

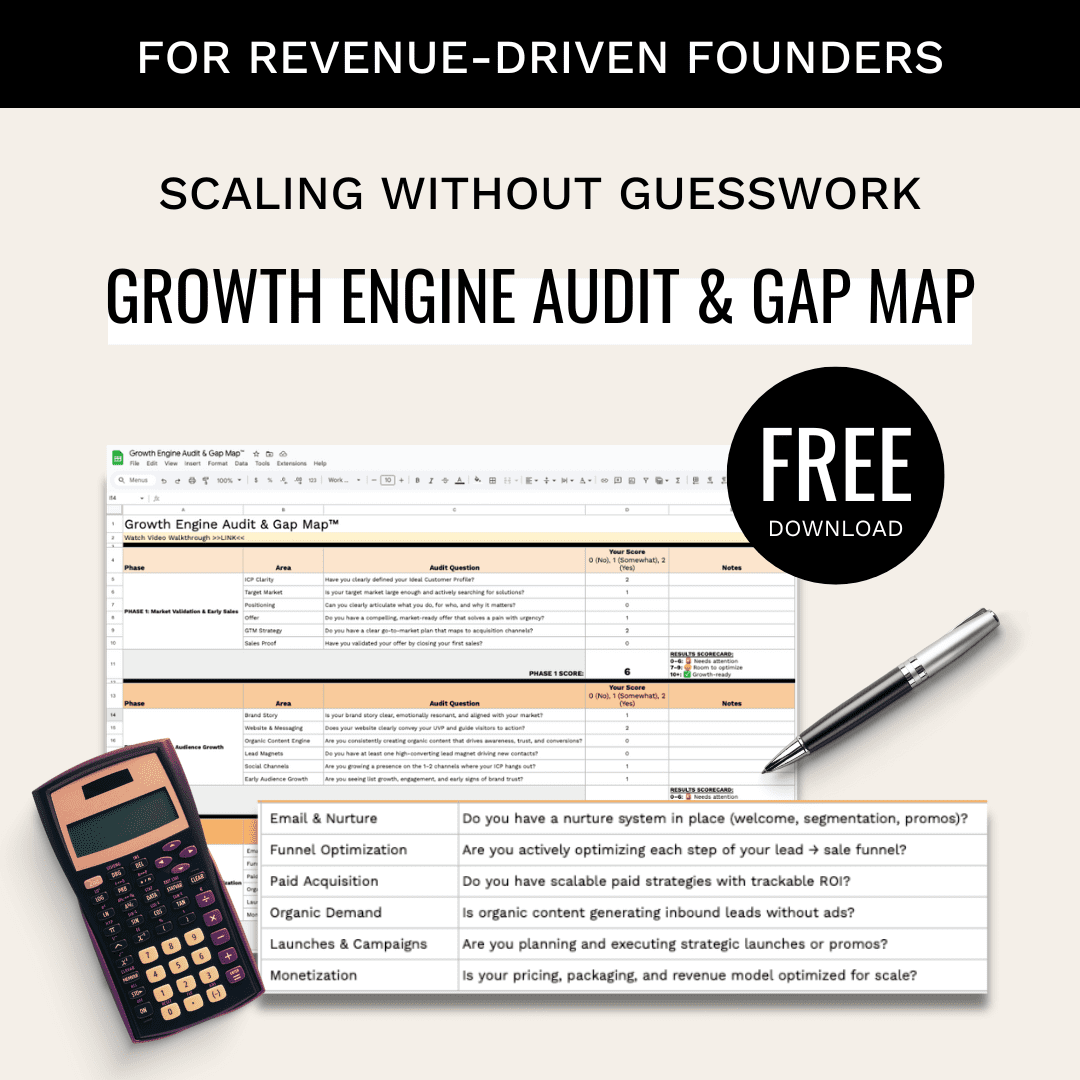

Get Income-Generating Ideas For Data Professionals

Are you tired of relying on one employer for your income? Are you dreaming of a side hustle that won’t put you at risk of getting fired or sued? Well, my friend, you’re in luck.